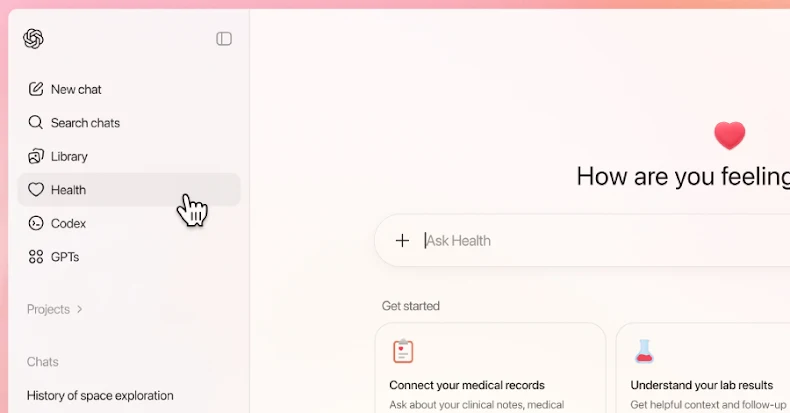

Artificial intelligence (AI) company OpenAI on Wednesday announced the launch of ChatGPT Health, a dedicated space that allows users to have conversations with the chatbot about their health.

To that end, the sandboxed experience offers users the optional ability to securely connect medical records and wellness apps, including Apple Health, Function, MyFitnessPal, Weight Watchers, AllTrails, Instacart, and Peloton, to get tailored responses, lab test insights, nutrition advice, personalized meal ideas, and suggested workout classes.

The new feature is rolling out for users with ChatGPT Free, Go, Plus, and Pro plans outside of the European Economic Area, Switzerland, and the U.K.

“ChatGPT Health builds on the strong privacy, security, and data controls across ChatGPT with additional, layered protections designed specifically for health — including purpose-built encryption and isolation to keep health conversations protected and compartmentalized,” OpenAI said in a statement.

Stating that over 230 million people globally ask health and wellness-related questions on the platform every week, OpenAI emphasized that the tool is designed to support medical care, not replace it or be used as a substitute for diagnosis or treatment.

The company also highlighted the various privacy and security features built into the Health experience:

- Health operates in a silo with enhanced privacy and its own memory, safeguarding sensitive data using “purpose-built” encryption and isolation

- Conversations in Health are not used to train OpenAI’s foundation models

- Users who attempt to have a health-related conversation in ChatGPT are prompted to switch over to Health for additional protections

- Health information and memories are not used to contextualize non-Health chats

- Conversations outside of Health cannot access files, conversations, or memories created within Health

- Apps can only connect with users’ health data with their explicit permission, even if they’re already connected to ChatGPT for conversations outside of Health

- All apps available in Health are required to meet OpenAI’s privacy and security requirements, such as collecting only the minimum data needed, and must undergo an additional security review before they are included in Health

Furthermore, OpenAI pointed out that it has evaluated the model that powers Health against clinical standards using HealthBench, a benchmark the company revealed in May 2025 as a way to better measure the capabilities of AI systems for health, putting safety, clarity, and escalation of care in focus.

“This evaluation-driven approach helps ensure the model performs well on the tasks people actually need help with, including explaining lab results in accessible language, preparing questions for an appointment, interpreting data from wearables and wellness apps, and summarizing care instructions,” it added.

OpenAI’s announcement follows an investigation from The Guardian that found Google AI Overviews to be providing false and misleading health information. OpenAI and Character.AI are also facing several lawsuits claiming their tools drove people who confided in them to suicide and harmful delusions. A report published by SFGate earlier this week detailed how a 19-year-old died of a drug overdose after trusting ChatGPT for medical advice.